Light Fields

Capturing both the spatial and angular information of the scene

Light fields are a powerful tool for capturing and rendering complex scenes. They are a 4D representation of the 3D world, capturing both the spatial and angular information of the scene. This allows for a wide range of applications, including rendering, refocusing, and virtual reality.

What is a Light Field?

Light fields, which are derived from the plenoptic function, capture the radiance (measured in watts per steradian per square meter) along every ray in 3D space, giving a comprehensive description of how light behaves in a scene. They go beyond traditional photography by recording the direction of light, not just its intensity at each point.

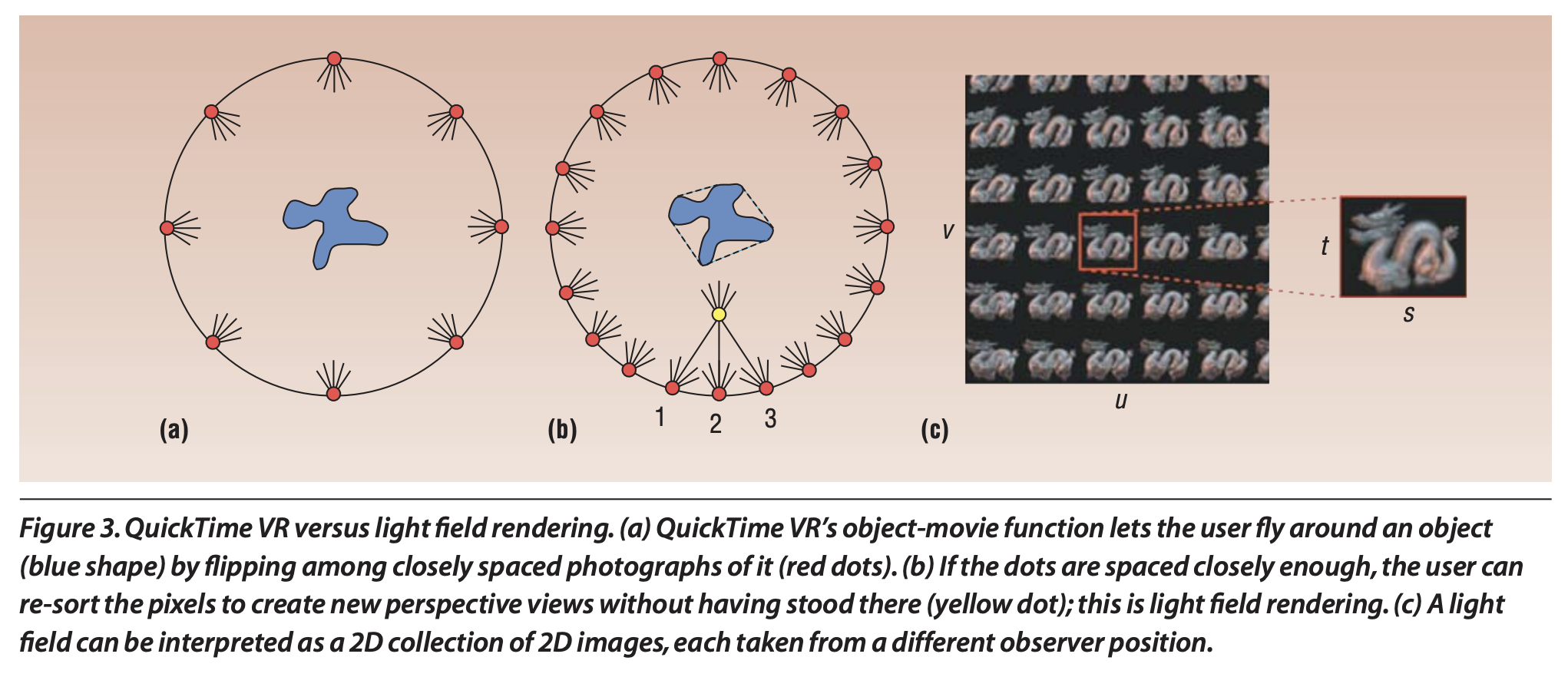

The technique dates to the 1990s. It uses an array of photographs taken from many viewpoints around a subject, such as a terra-cotta dragon placed inside a large sphere. Photographing the dragon from a hundred distinct points on the sphere's surface produces a coarsely sampled 4D light field. Flipping through these images lets viewers simulate circling the dragon or watching it rotate, an idea proposed by Eric Chen in 1995. It later became the object-movie feature of Apple's QuickTime VR, which lets users navigate around an object while preserving correct perspective, relative sizes, and occlusions within the scene.1

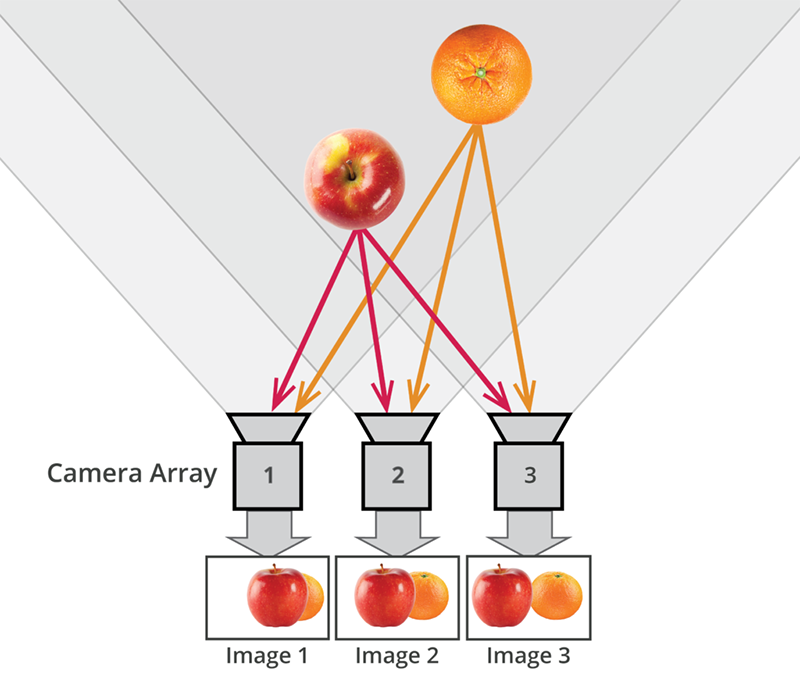

Increasing the number of camera positions to a thousand or more also lets viewers move toward the object, strengthening the sense of immersion and perspective. With such dense sampling, pixels in one photograph can be related to corresponding pixels in adjacent photographs, so new, perspective-correct views can be constructed from vantage points where no camera was placed. This is called light field rendering. The light field is treated as a 2D array of 2D images, in effect a 4D array of pixels, and novel views are generated by extracting appropriately positioned and oriented 2D slices from it. How far one can move from the original camera positions, and how sharp and faithful the rendered images are, depends on how densely the 4D light field is sampled.
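To make the "2D array of 2D images" idea concrete, here is a minimal sketch. It assumes the index order `lf[u][v][s][t]`, with `(u, v)` picking a camera on the grid and `(s, t)` a pixel in that camera's image, and it uses a made-up `toy_radiance` scene instead of real photographs. Fixing `(u, v)` gives an ordinary photograph; fixing `v` and `t` gives a slice across cameras in which scene features trace slanted lines (often called an epipolar-plane image). These axis-aligned slices are the simplest cases; a genuinely new view needs more general slices, usually with interpolation.

```python
# Hypothetical toy example: a 4D light field lf[u][v][s][t], where (u, v) indexes
# the camera position on a grid and (s, t) indexes a pixel in that camera's image.

def make_light_field(n_u, n_v, n_s, n_t, radiance):
    """Sample radiance(u, v, s, t) on an integer grid and return nested lists."""
    return [[[[radiance(u, v, s, t) for t in range(n_t)]
              for s in range(n_s)]
             for v in range(n_v)]
            for u in range(n_u)]


def view(lf, u, v):
    """Fix (u, v): the 2D photograph taken from one camera position."""
    return lf[u][v]


def epipolar_slice(lf, v, t):
    """Fix (v, t): a 2D (u, s) slice whose rows come from neighbouring cameras."""
    return [[lf[u][v][s][t] for s in range(len(lf[u][v]))] for u in range(len(lf))]


def toy_radiance(u, v, s, t):
    """Hypothetical scene: a stripe pattern that shifts one pixel per camera step in u."""
    return (s + u) % 4


lf = make_light_field(3, 3, 6, 2, toy_radiance)
print(view(lf, 1, 0)[2])            # pixel row s = 2 of camera (1, 0): [3, 3]
for row in epipolar_slice(lf, 0, 0):
    print(row)                      # each row is shifted by one relative to the last
```

Plain nested lists keep the sketch dependency-free; a real light field would normally live in a NumPy array, where the same slices are just indexing.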

Why is Light Field Important?

Light fields are a significant leap in visual representation, facilitating applications that were previously challenging or impossible with standard photography. These include post-capture refocusing, viewing scenes from slightly different angles without moving the camera, and realistically simulating the depth of field.

Motivation and Distinction from Conventional Image Processing

The motivation behind light field technology is to overcome the limitations of conventional imaging, which flattens the 3D world into a 2D projection and loses valuable information about light's direction. Unlike traditional imaging, which captures a single perspective, light fields record many perspectives of a scene (limited by the extent of the capture), allowing post-processing that can change focus and perspective and even see slightly around objects.

I mentioned in class that the next cool concept is Gaussian Splatting, a rasterization technique that renders photorealistic scenes in real time after learning them from a relatively small set of images.2 Using this technique, you can kind of fly through a scene and see it from different angles, even though you only captured a limited number of photos of it.

Technological Innovations and Methods

- Geometrical Optics Foundation: Because the objects of interest are much larger than the wavelength of light, light field technology builds on geometrical optics, where rays are the fundamental carriers of light information.

- Plenoptic Function and its Simplification: The plenoptic function is simplified by considering only the space outside an object's convex hull, which reduces it from 5D to 4D; the result is called the "photic field" or "4D light field". The simplification relies on radiance staying constant along a ray in empty space, so a ray's position along its own length carries no extra information and one dimension can be dropped.

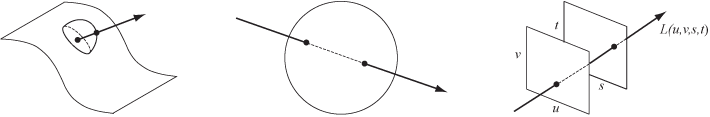

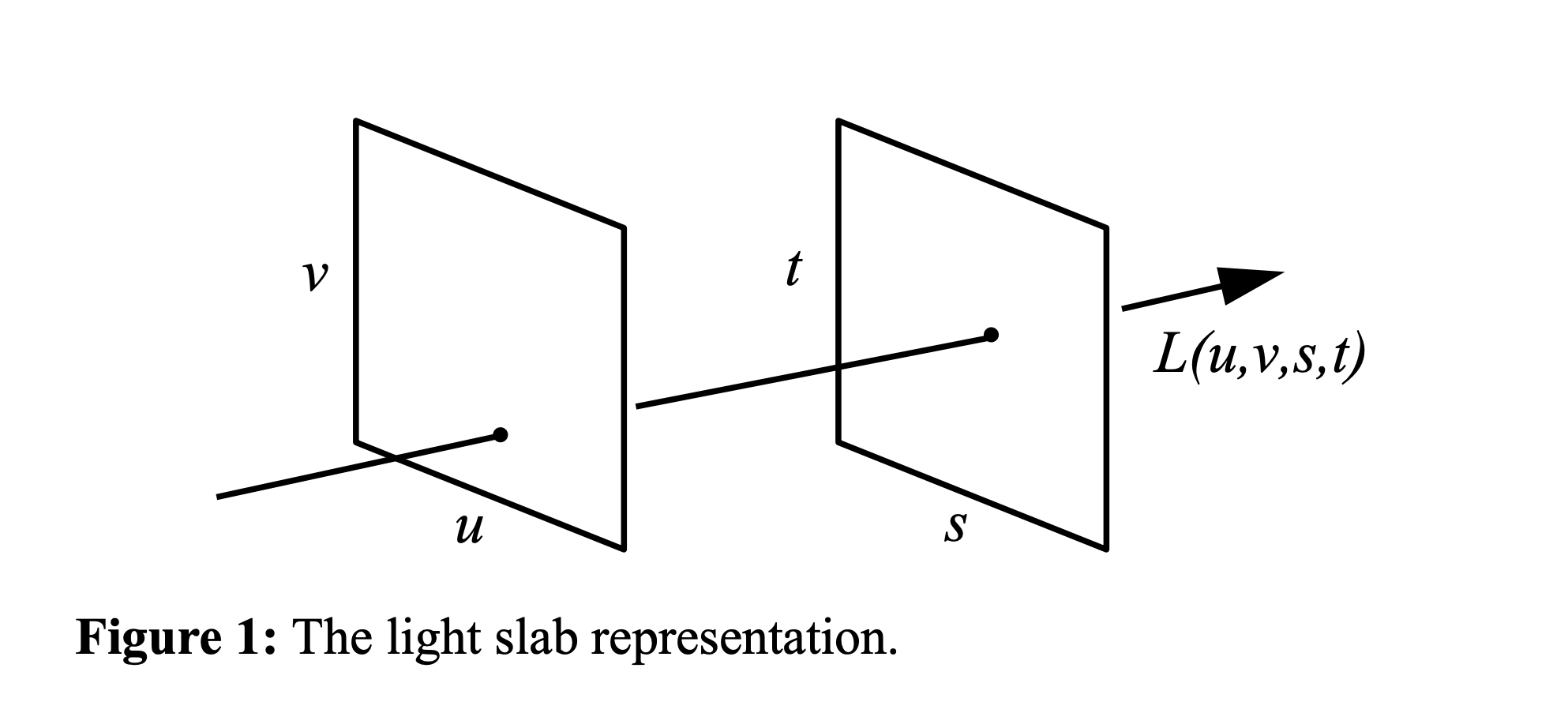

- Parameterization and Practical Applications: A ray can be parameterized by its intersections with two planes, a scheme closely related to perspective imaging. This parameterization underpins light field rendering, an image-based rendering technique that uses pre-captured images to display new views of a scene without needing a 3D geometric model.
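A rough sketch of the two-plane parameterization follows. It assumes, arbitrarily, that the first plane is `z = 0` and the second is `z = 1`; `two_plane_coords` is a hypothetical helper, not a library function. It also illustrates the 5D-to-4D argument: different starting points on the same ray, or a rescaled direction, map to the same four numbers.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def two_plane_coords(origin: Vec3, direction: Vec3,
                     z_uv: float = 0.0, z_st: float = 1.0) -> Tuple[float, float, float, float]:
    """Return (u, v, s, t): where a ray crosses the planes z = z_uv and z = z_st."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz == 0:
        raise ValueError("ray is parallel to the two planes")
    a = (z_uv - oz) / dz  # ray parameter at the (u, v) plane
    b = (z_st - oz) / dz  # ray parameter at the (s, t) plane
    return (ox + a * dx, oy + a * dy, ox + b * dx, oy + b * dy)


# Two different starting points on the same ray, and a rescaled direction,
# all give the same four coordinates: position along the ray is redundant.
print(two_plane_coords((0, 0, -1), (1, 2, 1)))   # (1.0, 2.0, 2.0, 4.0)
print(two_plane_coords((3, 6, 2), (1, 2, 1)))    # (1.0, 2.0, 2.0, 4.0)
print(two_plane_coords((0, 0, -1), (2, 4, 2)))   # (1.0, 2.0, 2.0, 4.0)
```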

Light Fields vs. Traditional Image Processing

- Dimensionality: Traditional image processing operates on 2D images or sequences of images (videos). Light fields, however, are represented as a 4D function, adding two additional dimensions to account for the angular information of light rays.

- Post-Capture Manipulation: While traditional image processing techniques allow for a wide range of manipulations, they are fundamentally limited by the information captured in the initial photograph. Light fields, with their richer data capture, enable manipulations that were previously impossible, such as changing the viewpoint of a captured scene or adjusting the focus after the fact.

- Data Volume and Processing: The increased dimensionality of light fields comes with challenges, notably the significant increase in data volume. Processing light fields requires more advanced computational techniques and algorithms, distinguishing it from traditional image processing, which deals with less data and lower-dimensional spaces.
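A back-of-the-envelope estimate shows how quickly the data grows. The numbers are hypothetical (a 9 × 9 grid of 512 × 512 RGB views, 8 bits per channel, no compression), chosen only for illustration.

```python
def light_field_bytes(n_u, n_v, n_s, n_t, channels=3, bytes_per_sample=1):
    """Uncompressed size of n_u x n_v views, each n_s x n_t pixels."""
    return n_u * n_v * n_s * n_t * channels * bytes_per_sample


MIB = 2 ** 20
photo = light_field_bytes(1, 1, 512, 512)   # a single 8-bit RGB image
lf = light_field_bytes(9, 9, 512, 512)      # hypothetical 9 x 9 grid of such views
print(photo / MIB, lf / MIB, lf // photo)   # 0.75 60.75 81
```

The size scales with the number of views, so doubling the angular resolution in both directions quadruples the data before any compression.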

Some alternative parameterizations of the 4D light field, which represents the flow of light through an empty region of three-dimensional space. Left: points on a plane or curved surface and directions leaving each point. Center: pairs of points on the surface of a sphere. Right: pairs of points on two planes in general (meaning any) position.3

Deep Dive into the Plenoptic Function

The plenoptic function is a mathematical representation of all the light rays in a 3D scene, and the light field is derived from it. It captures the radiance of light along every ray in 3D space, effectively encapsulating the directionality of light. The plenoptic function is a 7D function: three dimensions give the spatial position of the viewpoint, two give the direction of the ray, one gives the wavelength, and one gives time.

This is defined as:

$$P = P(\theta, \phi, \lambda, t, V_x, V_y, V_z)$$

Where:

- $\theta$ and $\phi$ represent the direction of the light rays

- $V_x$, $V_y$, and $V_z$ represent the spatial position of the rays

- $\lambda$ represents the wavelength of the light

- $t$ represents time

Light Slab Representation

The plenoptic function can be reduced to a light slab, a 4D function that indexes each ray by where it crosses two parallel planes (equivalently, two spatial and two angular coordinates). Considering only rays outside the convex hull of the object, treating the scene as static (dropping time), and handling wavelength as a few color channels reduces the dimensionality from 7D to 4D.

This simplification relies on the principle that radiance along a ray remains constant in free space, which eliminates one dimension of data. It is crucial for practical applications of light fields, because it reduces the complexity of the plenoptic function and makes it manageable for computational processing.
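With a light slab, rendering a new ray comes down to finding where it crosses the two planes and reading off the stored radiance. This sketch assumes planes at `z = 0` and `z = 1`, integer sample grids, a made-up radiance `s - u`, and nearest-neighbour lookup for brevity; interpolating between neighbouring samples would give smoother results. `ray_to_slab` and `lookup` are hypothetical helpers.

```python
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
Key = Tuple[int, int, int, int]


def ray_to_slab(origin: Vec3, direction: Vec3) -> Tuple[float, float, float, float]:
    """Intersect a ray with the planes z = 0 (u, v) and z = 1 (s, t)."""
    ox, oy, oz = origin
    dx, dy, dz = direction
    if dz == 0:
        raise ValueError("ray is parallel to the slab's planes")
    a, b = (0.0 - oz) / dz, (1.0 - oz) / dz
    return (ox + a * dx, oy + a * dy, ox + b * dx, oy + b * dy)


def lookup(slab: Dict[Key, float], size: int,
           origin: Vec3, direction: Vec3) -> Optional[float]:
    """Radiance of the nearest stored ray, or None if the ray misses the slab."""
    key = tuple(round(c) for c in ray_to_slab(origin, direction))
    if all(0 <= k < size for k in key):
        return slab[key]
    return None


# Hypothetical 4 x 4 x 4 x 4 slab filled with a made-up radiance, s - u.
size = 4
slab = {(u, v, s, t): float(s - u)
        for u in range(size) for v in range(size)
        for s in range(size) for t in range(size)}
print(lookup(slab, size, (1.2, 2.9, 0.0), (1.0, 0.0, 1.0)))  # 1.0
print(lookup(slab, size, (0.0, 0.0, 0.0), (5.0, 0.0, 1.0)))  # None
```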

Light Field Photography with a Hand-held Plenoptic Camera

Let's quickly outline the key equations and concepts behind the paper's method and the problem it solves:

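- Synthetic Photography Equation: The core of the paper's methodology is the Synthetic Photography Equation, which computes photographs as if they were taken by a synthetic conventional camera positioned and focused differently from the acquisition camera. The equation is given by:

  $$E(s', t') = \iint L\left(s' + \frac{u' - s'}{\gamma},\ t' + \frac{v' - t'}{\gamma},\ u' + \frac{s' - u'}{\delta},\ v' + \frac{t' - v'}{\delta}\right) A(u', v')\, du'\, dv'$$

  where $E(s', t')$ is the irradiance image on the synthetic film plane, $L(u, v, s, t)$ represents the acquired light field, $A(u', v')$ is the aperture function on the synthetic aperture plane, and $\gamma = \frac{\alpha + \beta - 1}{\alpha}$ and $\delta = \frac{\alpha + \beta - 1}{\beta}$ are defined from $\alpha$ and $\beta$, the relative depths of the synthetic film and aperture planes with respect to the acquired light field planes.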

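- Digital Refocusing: Digital refocusing is achieved by manipulating the acquired light field to simulate photographs taken at different focal planes. Under refocusing conditions, where only the synthetic film plane moves, the equation simplifies to:

  $$E(s', t') = \iint L\left(u, v,\ u + \frac{s' - u}{\alpha},\ v + \frac{t' - v}{\alpha}\right) A(u, v)\, du\, dv$$

  where $\alpha$ is the depth of the synthetic film plane relative to the actual sensor. This enables refocusing at different depths by shifting and summing the images formed through each pinhole over the entire aperture, effectively changing the focus after the photograph has been taken. The transform takes a light field as input and outputs a picture focused on a specific plane.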

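- Moving the Observer: When simulating a movement of the observer's position, the synthetic film plane stays at the sensor and the aperture is reduced to a pinhole at the observer's position, so the equation simplifies to account for observer displacement without changing the depth of focus:

  $$E(s', t') = L\left(s' + \frac{u_o - s'}{\beta},\ t' + \frac{v_o - t'}{\beta},\ s',\ t'\right)$$

  where $(u_o, v_o)$ represents the observer's position on the synthetic aperture plane and $\beta$ sets the depth of that plane. This allows for viewpoint adjustments post-capture.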
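The shift-and-add idea can be sketched on a 1D "flatland" light field `lf[j][s]` (one aperture coordinate, one sensor coordinate) to keep the code short. It assumes the refocusing relation s = u + (s' - u)/alpha and a box aperture, so every aperture sample gets equal weight. Writing x = s'/alpha turns that relation into s = x + u(1 - 1/alpha), so the loop below evaluates the refocusing equation up to a rescaling of the output coordinates by alpha. The helpers and the single-point test scene are hypothetical.

```python
import math
from typing import List


def sample(row: List[float], s: float) -> float:
    """Linearly interpolate row at fractional position s; zero outside the sensor."""
    i = math.floor(s)
    f = s - i

    def at(k: int) -> float:
        return row[k] if 0 <= k < len(row) else 0.0

    return (1 - f) * at(i) + f * at(i + 1)


def refocus(lf: List[List[float]], alpha: float) -> List[float]:
    """Shift-and-add refocusing of a flatland light field lf[j][s].

    Row j holds the image seen through aperture position u = j - centre.
    Each row is shifted by u * (1 - 1/alpha) and the rows are averaged
    (a box aperture: every aperture sample gets the same weight).
    """
    n_u, n_s = len(lf), len(lf[0])
    centre = (n_u - 1) / 2
    image = []
    for x in range(n_s):
        total = sum(sample(row, x + (j - centre) * (1 - 1 / alpha))
                    for j, row in enumerate(lf))
        image.append(total / n_u)
    return image


# Hypothetical scene: a single point whose ray through aperture position u
# lands on pixel 5 - u, i.e. it is out of focus on the captured sensor.
point = [[1.0 if s == 5 - (j - 2) else 0.0 for s in range(11)] for j in range(5)]
print(refocus(point, 1.0))   # captured focus: the point is spread over 5 pixels
print(refocus(point, 0.5))   # refocused: all rays add up at pixel 5
```

With `alpha = 1` the point is smeared across pixels 3 to 7 at 0.2 each; with `alpha = 0.5` all five rays land on pixel 5.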

Figure from Lytro, the company that popularized light field photography, showing the concept of disparity recording in light field photography.

Discrete Focal Stack Transform

The Discrete Focal Stack Transform computes a series of 2D photographs, each focused on one of a set of discretely sampled focal planes, from a discretely sampled light field. It offers a practical approximation to the continuous synthetic photography equation for digital refocusing applications. Its formulation involves the following terms:

- The irradiance at each coordinate on the image plane, which corresponds to the pixel intensity values of the refocused photograph.

- The light field function, which encodes the radiance of light rays passing through every point in space in every direction.

- The number of discretely sampled focal planes, which divides the depth of the scene into layers, each corresponding to a different focus depth.

- The depth of each focal plane. This parameter determines how far light rays are shifted to simulate refocusing on that plane; the shift depends on the inverse of the depth.

- The aperture function for each focal plane, which determines how much the light rays passing through each point of the aperture contribute to that plane's image.

For each focal plane, the transform sums light field samples across the aperture after shifting them according to that plane's depth and weighting them by the aperture function. The result is a stack of refocused photographs, one per focal plane, each mimicking how a conventional camera's focus mechanism gathers the rays that converge at a particular depth.
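Here is a direct, unoptimized way to compute a discrete focal stack, again on a hypothetical flatland light field `lf[j][s]` with an odd number of aperture samples. Each focal plane is represented by an integer slope `d` (pixels of shift per aperture step), which stands in for that plane's depth parameter; integer slopes avoid interpolation. This is an illustrative brute-force evaluation, not an optimized implementation of the transform.

```python
from typing import List


def focal_stack(lf: List[List[float]], slopes: List[int]) -> List[List[float]]:
    """Brute-force discrete focal stack for a flatland light field lf[j][s].

    For each integer slope d, output pixel x sums the samples at s = x + d * u,
    where u = j - centre is the aperture coordinate (assumes an odd number of
    aperture samples). Samples that fall off the sensor count as 0.
    """
    n_u, n_s = len(lf), len(lf[0])
    centre = (n_u - 1) // 2
    stack = []
    for d in slopes:
        plane = []
        for x in range(n_s):
            total = 0.0
            for j, row in enumerate(lf):
                s = x + d * (j - centre)
                if 0 <= s < n_s:
                    total += row[s]
            plane.append(total / n_u)   # box aperture: equal weights
        stack.append(plane)
    return stack


# Hypothetical scene: one point whose ray through aperture position u hits pixel 5 - u.
point = [[1.0 if s == 5 - (j - 2) else 0.0 for s in range(11)] for j in range(5)]
stack = focal_stack(point, [-1, 0, 1])
print([plane[5] for plane in stack])   # [1.0, 0.2, 0.2]
```

The point is sharpest (brightest) in the plane with slope -1 and blurred in the other two, which is the depth-dependent behaviour a focal stack is meant to expose.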

Math Stuff

- Summation over Discrete Focal Planes: Computing a finite set of focal planes approximates the continuous range of refocusing depths that the synthetic photography equation allows, and for each plane the integral over the aperture becomes a sum over the aperture samples of the captured light field. This approximation is central to the practical application of digital refocusing, as it permits the computation of refocused images from a finite set of captured light field data.

- Depth and Aperture Parameterization: The depth parameter controls the depth-specific aggregation of light rays, and the aperture function modulates their contributions according to the aperture's size and shape. Defining them precisely ensures that each focal plane's optical characteristics are accurately represented in the synthesized image.

Some further explanations of the Synthetic Photography Equation

The synthetic photography equation is built on geometric optics and on reparameterizing the light field to synthesize new images. The key steps are:

- Reparameterizing the Light Field: Given the light field $L(u, v, s, t)$, where $(u, v)$ are coordinates on the aperture plane and $(s, t)$ on the image sensor plane, the equation reparameterizes this light field to account for the synthetic camera's configuration.

- Focus and Aperture Simulation: By integrating the reparameterized light field over the synthetic aperture, weighted by the aperture function, the equation simulates focusing the camera at different depths (by moving the synthetic film plane), changing the depth of field (by changing the size of the aperture), and adjusting the viewpoint (by shifting the aperture, and with it the center of perspective).

- The Role of $\alpha$ and $\beta$: These parameters give the depths of the synthetic film plane and the synthetic aperture plane relative to the planes on which the light field was captured. Adjusting $\alpha$ moves the plane of focus closer to or further from the camera, while adjusting $\beta$ moves the synthetic aperture plane and hence the observer's viewpoint.
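To see how alpha and beta act, here is a flatland sketch of the reparameterization step. It assumes gamma = (alpha + beta - 1)/alpha and delta = (alpha + beta - 1)/beta, together with an assumed geometry (lens plane at depth 0, sensor at depth 1, synthetic film at depth alpha, synthetic aperture at depth 1 - beta). Under that geometry a synthetic ray from u' to s' crosses the lens plane at u = s' + (u' - s')/gamma and the sensor at s = u' + (s' - u')/delta. `synthetic_to_acquired` is a hypothetical helper.

```python
def synthetic_to_acquired(u_syn: float, s_syn: float, alpha: float, beta: float):
    """Map a flatland synthetic ray (u', s') to acquired coordinates (u, s).

    Assumed geometry: lens (u) plane at depth 0, sensor (s) plane at depth 1,
    synthetic film plane at depth alpha, synthetic aperture plane at depth
    1 - beta. So beta = 1 puts the synthetic aperture on the lens and
    alpha = 1 puts the synthetic film on the sensor. alpha, beta must be nonzero.
    """
    if alpha + beta - 1 == 0:
        raise ValueError("synthetic aperture and film planes coincide")
    gamma = (alpha + beta - 1) / alpha
    delta = (alpha + beta - 1) / beta
    u = s_syn + (u_syn - s_syn) / gamma   # where the ray crosses the lens plane
    s = u_syn + (s_syn - u_syn) / delta   # where the ray crosses the sensor plane
    return u, s


print(synthetic_to_acquired(0.5, 2.0, alpha=2.0, beta=1.0))  # (0.5, 1.25)
print(synthetic_to_acquired(1.0, 3.0, alpha=1.5, beta=0.5))  # approximately (0.0, 2.0)
```

Setting beta = 1 returns u = u', the refocusing case, and setting alpha = 1 returns s = s', the moving-observer case.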

How It Works

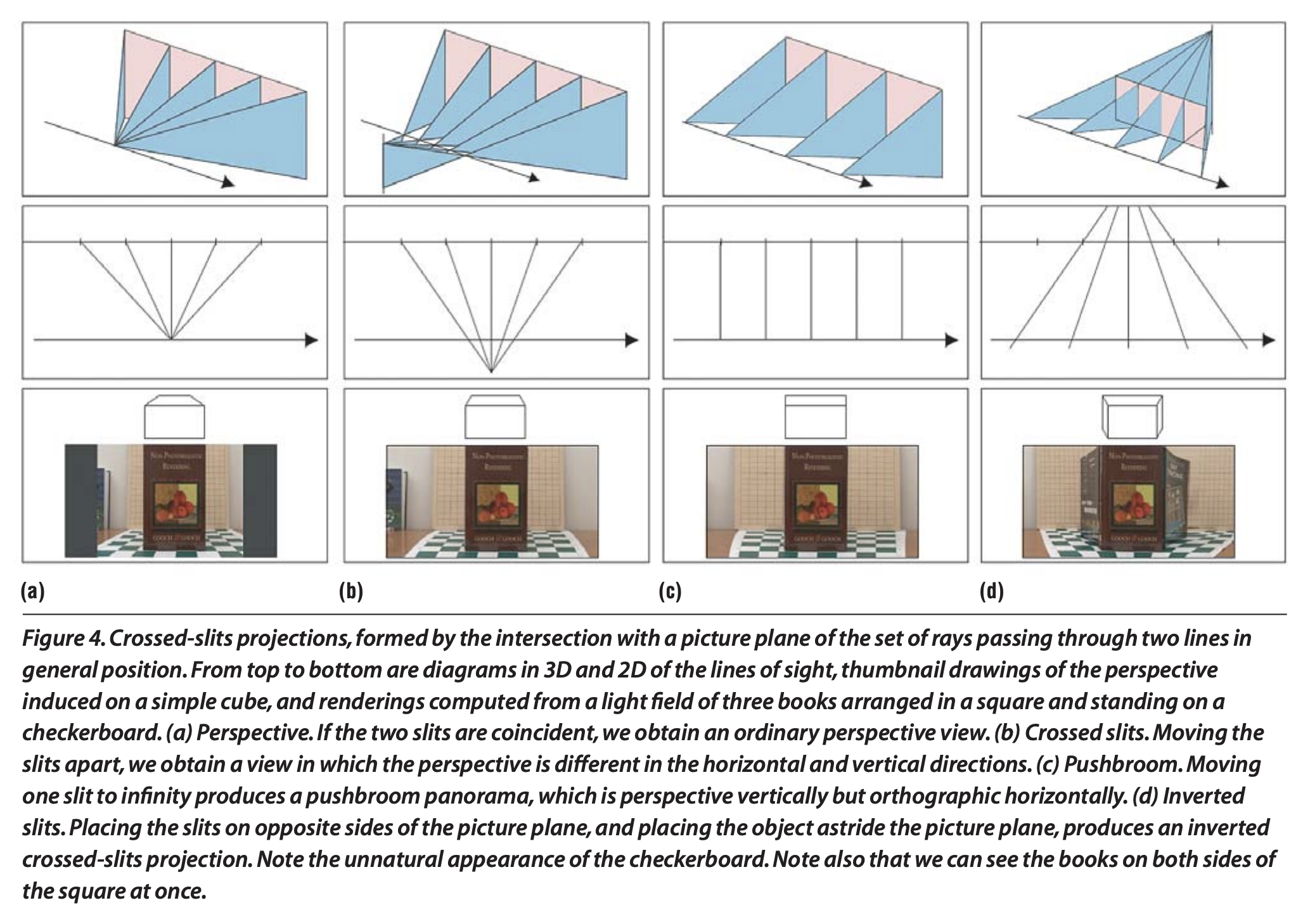

Figure from the paper showing the concept of cross-slit projections and how they are used to simulate different camera configurations.2

- For Refocusing: The equation essentially sums up contributions of light rays from different angles that would converge at a particular point if the camera were focused at a different depth. This is equivalent to selectively summing parts of the light field that correspond to a virtual camera focused on a different plane than where the plenoptic camera was focused during capture.

- For Perspective Shifts: By altering the part of the light field that is integrated, you can simulate viewing the scene from a slightly different angle than where the camera was physically located, mimicking a shift in the observer's position.
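Here is a flatland sketch of a perspective shift, assuming a hypothetical light field `lf[j][s]` like the ones above. Averaging only the aperture samples on one side approximates a smaller aperture whose center, and therefore the viewpoint, has moved to that side. `partial_aperture_image` is a hypothetical helper.

```python
from typing import List


def partial_aperture_image(lf: List[List[float]], j_start: int, j_stop: int) -> List[float]:
    """Average only aperture samples j_start <= j < j_stop (must be non-empty).

    Restricting the integral to one side of the aperture approximates a smaller
    aperture whose centre (the centre of perspective) has moved to that side.
    """
    rows = lf[j_start:j_stop]
    n_s = len(lf[0])
    return [sum(row[x] for row in rows) / len(rows) for x in range(n_s)]


# Hypothetical scene: one out-of-focus point whose ray through aperture position u hits pixel 5 - u.
point = [[1.0 if s == 5 - (j - 2) else 0.0 for s in range(11)] for j in range(5)]
left = partial_aperture_image(point, 0, 2)    # aperture samples u = -2, -1
right = partial_aperture_image(point, 3, 5)   # aperture samples u = +1, +2
print(left.index(max(left)), right.index(max(right)))  # 6 3
```

The point shows up at pixels 6 and 7 through the left part of the aperture and at pixels 3 and 4 through the right part, which is the parallax of a small viewpoint shift. Narrowing the window to a single aperture sample gives a pinhole view; widening it gathers more light but reintroduces defocus blur.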

I hope this all makes sense. I am writing all of this at 4AM and I am not sure if I am making any sense. I will come back to this later and make it more clear.
